Vivace

A live coding language for the Web Audio API

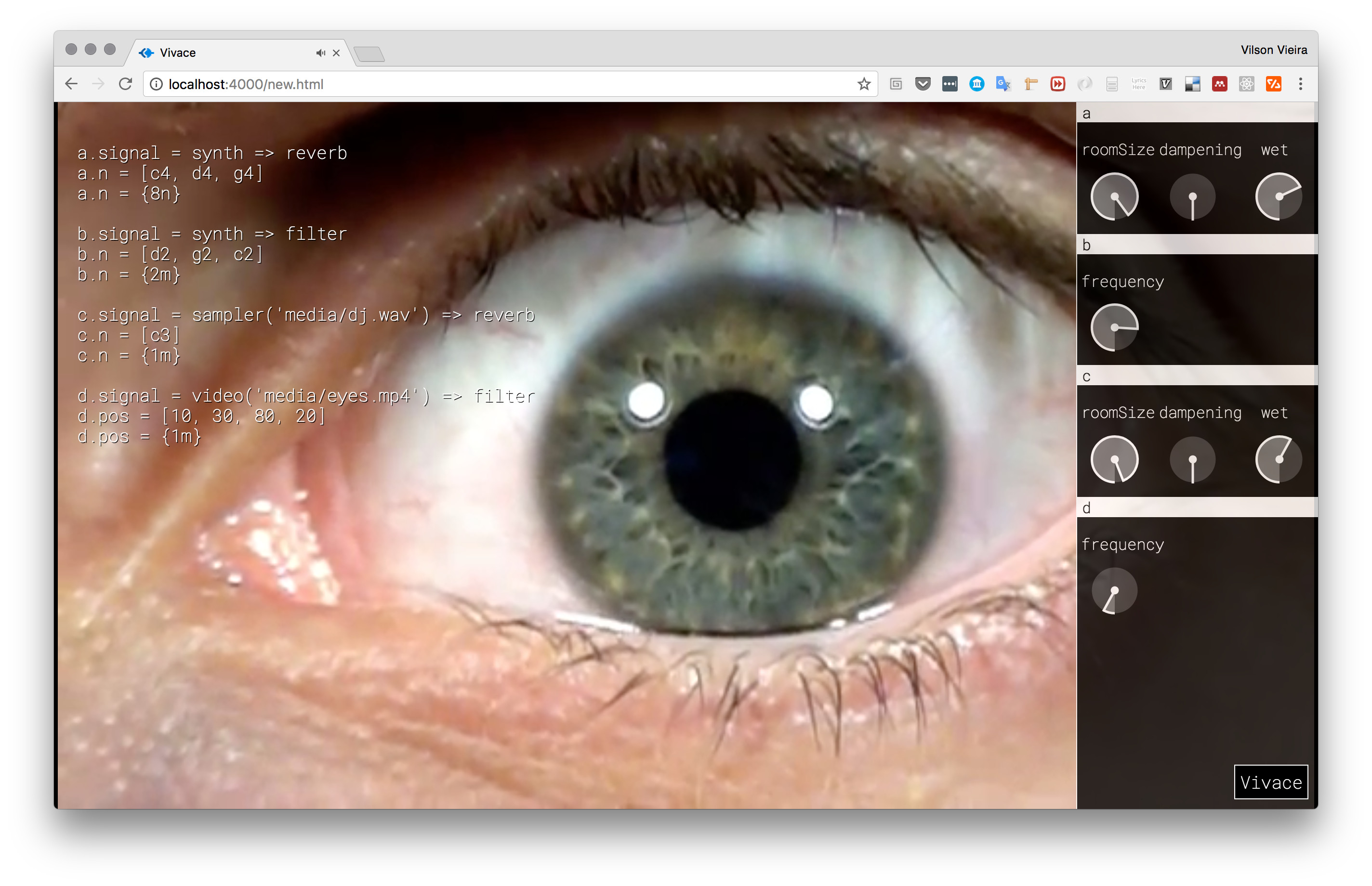

Vivace is a live coding DSL (Domain Specific Language) that runs on top of the Web Audio API for audio processing and HTML5 video for video display and control. Its parser and lexer are built with Jison, and Tone.js handles audio processing and event scheduling, synced with the Web Audio API’s internal clock.

Vivace aims to be easy to learn and use. Its main features are:

- Simple syntax and grammar rules

- Runs on every popular browser, so newcomers to live coding can start practicing right away

- Uses the native Web Audio API (abstracted by Tone.js), so it evolves together with the web standards

- Mixes audio and video capabilities, making it a Swiss Army knife for live multimedia performance

- Full control of audio nodes and their parameters, either through code or through the UI

OK, but what is live coding?

Live coding is an alternative way to compose and play music (or other media) in real time. The performer/composer plays on a computer and shows their screen to the audience, making them part of the performance while demystifying what the musician is really doing to generate those weird sounds. Live coders commonly use general-purpose languages or create their own computer music languages.

The language

Vivace is based on a declarative paradigm: you state which parameters of a voice you want to change, what their new values will be, and how long each value will last. That’s it.

For example, this is a “Hello, world!” in Vivace:

a.signal = synth

a.notes = [d4, e4, c4]

a.notes = {1m}

Here, a is the name of a voice. Every voice has a signal param that defines the voice’s timbre; in this example, it is a simple monophonic synthesizer. Another default param is notes. Every param accepts two types of lists: values and durations. In this case, the values list [d4, e4, c4] is played in order, one note per measure, as defined by the durations list {1m}.
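The pairing of values and durations can be pictured with the TypeScript sketch below. It is a minimal, hypothetical model, not Vivace’s implementation: it assumes each list cycles on its own (so a single duration like {1m} repeats for every note), and it takes durations already converted to seconds, with one measure taken as 2 seconds (4/4 at 120 BPM). The schedule function and Step type are illustrative names.

```typescript
// Hypothetical sketch: pair a values list with a durations list.
// Assumption: each list cycles independently, so the shorter one repeats.
// Durations are plain seconds here; Vivace also accepts expressions like 1m or 4n.

interface Step<T> {
  time: number;     // start time in seconds
  value: T;         // value applied at that time
  duration: number; // how long the value lasts, in seconds
}

function schedule<T>(values: T[], durations: number[], steps: number): Step<T>[] {
  if (values.length === 0 || durations.length === 0) {
    throw new Error("both lists must be non-empty");
  }
  const result: Step<T>[] = [];
  let time = 0;
  for (let i = 0; i < steps; i++) {
    const value = values[i % values.length];          // cycle through the values
    const duration = durations[i % durations.length]; // cycle through the durations
    result.push({ time, value, duration });
    time += duration;
  }
  return result;
}

// a.notes = [d4, e4, c4] with a.notes = {1m}, assuming one measure lasts 2 s.
const hello = schedule(["d4", "e4", "c4"], [2], 4);
console.log(hello.map((s) => `${s.time}s: ${s.value}`).join(", "));
```

After the third note the values list starts over, so the fourth step plays d4 again at 6 seconds.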

Try typing the example above and press Ctrl + Enter (Cmd + Enter or Alt + Enter also work) to evaluate it. Then try changing the values and pressing Ctrl + Enter again and again. That’s the essence of live coding: you’re manipulating media on the fly.

Voices

A voice can have any name, like a, b, foo or drum303. You don’t need to declare a voice: just use it when defining a signal and it will be created automatically.

Signals

Every voice has a default parameter that must be set before anything else: the signal. You can’t play a voice without an instrument, right? Just define a signal using the chain operator => and the names of the audio nodes you want to chain together:

a.signal = synth => filter => reverb

In this example you are creating a monophonic synthesizer, plugging it into a filter and then into a reverb. You can try other chains like:

a.signal = sampler('media/bass.wav') => reverb

b.signal = video('media/eyes.mp4') => filter

Note that some audio nodes in a chain can take arguments, like the name of the audio file to be used by sampler.
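One way to picture what the chain operator does is to split the chain into nodes, read each node’s arguments, and connect every node to the next one. The TypeScript sketch below does this on a chain string; it is a hypothetical illustration rather than Vivace’s Jison grammar, and the parseChain and connections helpers, as well as the final "destination" hop standing in for the speakers, are assumptions.

```typescript
// Hypothetical sketch of reading a chain like "sampler('media/bass.wav') => reverb":
// split on the chain operator, parse optional arguments, then connect each node
// to the next. The final "destination" hop (the speakers) is an assumption;
// this is not Vivace's actual Jison grammar.

interface NodeSpec {
  name: string;   // audio node name, e.g. synth, filter, sampler
  args: string[]; // arguments in parentheses, quotes stripped
}

function parseChain(chain: string): NodeSpec[] {
  return chain.split("=>").map((part) => {
    const m = /^\s*(\w+)\s*(?:\((.*)\))?\s*$/.exec(part);
    if (!m) throw new Error(`cannot parse node: ${part}`);
    const args = m[2]
      ? m[2].split(",").map((a) => a.trim().replace(/^'(.*)'$/, "$1"))
      : [];
    return { name: m[1], args };
  });
}

// List the connections, in order, from the first node to the output.
function connections(nodes: NodeSpec[]): string[] {
  const names = [...nodes.map((n) => n.name), "destination"];
  const links: string[] = [];
  for (let i = 0; i < names.length - 1; i++) {
    links.push(`${names[i]} -> ${names[i + 1]}`);
  }
  return links;
}

const nodes = parseChain("sampler('media/bass.wav') => reverb");
console.log(nodes);
console.log(connections(nodes).join("\n"));
```

Here the sampler receives 'media/bass.wav' as its argument and the audio flows sampler -> reverb -> destination.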

Parameters: values, notes and durations

Once the signal is defined for your voice, you are free to automate every parameter of the audio nodes in the chain. Just write the name of the audio node followed by the name of the parameter whose values you want to change:

a.filter.frequency = [300, 450]

a.filter.frequency = {4n, 8n}

In this example we are changing the frequency of the filter audio node. We do that using values and durations lists:

- Values are lists delimited by [ and ]. Their values can be numbers (frequencies in Hz, positions in seconds for videos, etc.), musical notes in note-octave format like c4 or g2, and even degrees of a musical scale like ii, iii, iv.

- Durations are lists delimited by { and }. Their values can be numbers (in seconds) or time expressions relative to the BPM and time signature, like 1m (one whole measure), 4n (one quarter note) or 8t (one eighth-note triplet).
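To make the time expressions concrete, here is a minimal TypeScript sketch converting the three forms above into seconds. It assumes a 4/4 time signature and follows Tone.js conventions, where a quarter note lasts 60 / BPM seconds, Nn is 1/N of a whole note and Nt is the matching triplet (two thirds of Nn). The toSeconds helper is hypothetical, and Tone.js itself understands many more formats.

```typescript
// Hypothetical sketch: convert Tone.js-style duration expressions to seconds.
// Assumptions: a 4/4 time signature, and only the forms "Nm", "Nn", "Nt"
// or a plain number of seconds.

function toSeconds(expr: string | number, bpm: number, beatsPerMeasure = 4): number {
  if (typeof expr === "number") return expr; // plain numbers are already seconds
  const quarter = 60 / bpm; // one beat (quarter note) in seconds
  const match = /^(\d+)([mnt])$/.exec(expr.trim());
  if (!match) throw new Error(`unsupported duration: ${expr}`);
  const n = Number(match[1]);
  switch (match[2]) {
    case "m": // n whole measures
      return n * beatsPerMeasure * quarter;
    case "n": // 1/n of a whole note: 4n = quarter, 8n = eighth
      return (4 / n) * quarter;
    case "t": // triplet: three of them fit in the time of two "n" notes
      return (4 / n) * quarter * (2 / 3);
    default:
      throw new Error(`unsupported unit: ${match[2]}`);
  }
}

for (const d of ["1m", "4n", "8n", "8t"]) {
  console.log(d, toSeconds(d, 120).toFixed(3));
}
```

At 120 BPM this gives 2 s for 1m, 0.5 s for 4n, 0.25 s for 8n and about 0.167 s for 8t.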

Using scales

Instead of specifying individual notes, it’s possible to define a scale for a voice and then use scale degrees to play notes from it:

a.sig = synth

a.root = c4

a.scale = minor

a.notes = [i, iii, v]

a.notes = {4n}

It’s convenient to specify the root of the scale, since degrees are relative to it. Scale degrees can be written as Roman numerals (e.g. i, ii, iii) or integers:

a.sig = synth

a.root = e4

a.scale = minor

a.notes = [1, 3, 5]

a.notes = {4n}
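The degree-to-note mapping can be sketched as follows. Vivace delegates scales to Teoria.js; this TypeScript version instead hard-codes a few interval sets, uses MIDI numbers with C4 = 60, spells accidentals with sharps, and assumes that degrees beyond the last note of the scale wrap into the next octave. All helper names are hypothetical.

```typescript
// Hypothetical sketch: turn scale degrees (Roman numerals or integers) into notes.
// Assumptions: MIDI numbering with C4 = 60, interval sets written out by hand
// (Vivace uses Teoria.js instead), and degrees past the end of the scale
// wrapping into the next octave.

const SCALES: Record<string, number[]> = {
  major: [0, 2, 4, 5, 7, 9, 11],
  minor: [0, 2, 3, 5, 7, 8, 10],
  majorpentatonic: [0, 2, 4, 7, 9],
  minorpentatonic: [0, 3, 5, 7, 10],
};

const NAMES = ["c", "c#", "d", "d#", "e", "f", "f#", "g", "g#", "a", "a#", "b"];
const ROMAN = ["i", "ii", "iii", "iv", "v", "vi", "vii"];

function noteToMidi(note: string): number {
  const m = /^([a-g]#?)(-?\d+)$/.exec(note.toLowerCase());
  if (!m) throw new Error(`bad note: ${note}`);
  return NAMES.indexOf(m[1]) + 12 * (Number(m[2]) + 1);
}

function midiToNote(midi: number): string {
  return NAMES[midi % 12] + (Math.floor(midi / 12) - 1);
}

function degreeToNote(degree: string | number, root: string, scale: string): string {
  const steps = SCALES[scale];
  if (!steps) throw new Error(`unknown scale: ${scale}`);
  // Degrees are 1-based: "i" and 1 both mean the root.
  const d = typeof degree === "number" ? degree : ROMAN.indexOf(degree.toLowerCase()) + 1;
  if (d < 1) throw new Error(`bad degree: ${degree}`);
  const index = d - 1;
  const octave = Math.floor(index / steps.length); // wrap past the top of the scale
  const semitones = steps[index % steps.length] + 12 * octave;
  return midiToNote(noteToMidi(root) + semitones);
}

// a.root = e4, a.scale = minor, a.notes = [1, 3, 5]
console.log([1, 3, 5].map((deg) => degreeToNote(deg, "e4", "minor")));
// a.root = c4, a.scale = minor, a.notes = [i, iii, v]
console.log(["i", "iii", "v"].map((deg) => degreeToNote(deg, "c4", "minor")));
```

With E4 as root and the minor scale, degrees 1, 3 and 5 come out as e4, g4 and b4; with C4, i, iii and v give c4, d#4 (E flat) and g4.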

If only a root note is specified, without a scale, the list of notes becomes a list of semitone offsets from the root note. For example, the following voice plays the notes 0, 1 and 2 semitones above E4; in other words, it plays the unison, minor second and major second of E4:

a.sig = synth

a.root = e4

a.notes = [0, 1, 2]

a.notes = {4n}
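Without a scale, the arithmetic is just an offset in semitones from the root. A small TypeScript sketch, assuming MIDI numbering (C4 = 60) and sharp spellings; intervalsFromRoot and its helpers are hypothetical names:

```typescript
// Hypothetical sketch: with a root but no scale, each value is read as a number
// of semitones above the root. Assumes MIDI numbering (C4 = 60) and sharp spellings.

const PITCH_CLASSES = ["c", "c#", "d", "d#", "e", "f", "f#", "g", "g#", "a", "a#", "b"];

function toMidi(note: string): number {
  const m = /^([a-g]#?)(-?\d+)$/.exec(note.toLowerCase());
  if (!m) throw new Error(`bad note: ${note}`);
  return PITCH_CLASSES.indexOf(m[1]) + 12 * (Number(m[2]) + 1);
}

function toName(midi: number): string {
  return PITCH_CLASSES[midi % 12] + (Math.floor(midi / 12) - 1);
}

// Add each semitone offset to the root's MIDI number.
function intervalsFromRoot(root: string, semitones: number[]): string[] {
  const base = toMidi(root);
  return semitones.map((s) => toName(base + s));
}

// a.root = e4, a.notes = [0, 1, 2]: unison, minor second, major second
console.log(intervalsFromRoot("e4", [0, 1, 2]));
```

For the example above, [0, 1, 2] from e4 gives e4, f4 and f#4; an offset of 12 would give e5, one octave up.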

Vivace uses Teoria.js to handle scales. These are the supported scales:

- chromatic

- harmonicchromatic

- major

- minor

- melodicminor

- harmonicminor

- doubleharmonic

- majorpentatonic

- minorpentatonic

- blues

- aeolian

- dorian

- ionian

- locrian

- lydian

- mixolydian

- phrygian

- flamenco

- wholetone

Controlling videos

Videos can be manipulated by using video as a node in a signal chain:

foo.signal = video('media/eyes.mp4')

foo.pos = [10, 20]

foo.pos = {8n}

Videos accept pos as a default parameter, which specifies the position (in seconds) from which the video should start playing. Like any other parameter, it also takes a durations list, which specifies how long each position is played. Video playback is synced with the Web Audio API’s internal clock, so audio and video stay in sync.
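The interplay between pos and its durations can be sketched as a function that returns which point of the video (in seconds) should be showing at a given time. This TypeScript sketch is hypothetical: it assumes both lists cycle, that durations are already in seconds (an 8n lasts 0.25 s at 120 BPM), and that within each step the video plays forward normally from the requested position until the next step seeks again. The videoTimeAt name is illustrative.

```typescript
// Hypothetical sketch: which point of the video (in seconds) is showing at time t,
// given foo.pos values and a durations list already converted to seconds.
// Assumptions: both lists cycle, and within each step the video plays forward
// normally from the requested position until the next step seeks again.

function videoTimeAt(t: number, positions: number[], durations: number[]): number {
  if (positions.length === 0 || durations.length === 0) {
    throw new Error("both lists must be non-empty");
  }
  if (durations.some((d) => d <= 0)) throw new Error("durations must be positive");
  if (t < 0) throw new Error("time must be non-negative");

  // Walk through the steps until we find the one that contains t.
  let start = 0;
  let step = 0;
  while (t >= start + durations[step % durations.length]) {
    start += durations[step % durations.length];
    step++;
  }
  // Seek to this step's position, then add the time elapsed inside the step.
  return positions[step % positions.length] + (t - start);
}

// foo.pos = [10, 20] with foo.pos = {8n}, assuming 120 BPM (8n = 0.25 s).
for (const t of [0, 0.1, 0.25, 0.5]) {
  console.log(t, videoTimeAt(t, [10, 20], [0.25]));
}
```

Under these assumptions, the video shows second 10 at the start, jumps to second 20 after 0.25 s and returns to second 10 after 0.5 s.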

You can route a video’s audio stream through an audio chain just like any other audio node, using the chain operator =>:

foo.signal = video('media/eyes.mp4') => reverb => filter

UI

To toggle the UI controls, click the Vivace banner at the bottom right of the screen or press Ctrl + ] (Cmd + ] and Alt + ] also work).

Click the banner (or press Ctrl + ]) again whenever you want to hide or show them.

Every time you create a new voice, a UI panel with controllers is created in Vivace’s drawer (the panel on the right).

When you change a parameter of a voice using the UI, the automations previously defined for it are canceled, and the parameter is then controlled manually through the UI only. If you evaluate the automations again, control passes back to them as expected.
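The hand-over between code and UI described above behaves like a small state machine. The TypeScript model below is a hypothetical illustration; the ControlledParam class and its methods are not part of Vivace, and the automation is reduced to a plain values list. Evaluating code installs an automation and takes control, moving a UI control cancels the automation, and evaluating again hands control back.

```typescript
// Hypothetical model of a parameter shared by code automations and the UI.
// Assumption: an "automation" is reduced to the last evaluated values list;
// the class and method names are illustrative, not Vivace's internals.

type Source = "automation" | "ui";

class ControlledParam {
  private automation: number[] | null = null;
  private manualValue: number | null = null;
  source: Source = "automation";

  // Evaluating code (Ctrl + Enter) installs the automation and takes control back.
  evaluate(values: number[]): void {
    this.automation = values;
    this.manualValue = null;
    this.source = "automation";
  }

  // Moving a UI control cancels the automation and switches to manual control.
  setFromUI(value: number): void {
    this.automation = null;
    this.manualValue = value;
    this.source = "ui";
  }

  // Value at a given automation step, or the manual value under UI control.
  valueAt(step: number): number | null {
    if (this.source === "ui") return this.manualValue;
    if (!this.automation || this.automation.length === 0) return null;
    return this.automation[step % this.automation.length];
  }
}

const freq = new ControlledParam();
freq.evaluate([300, 450]);
console.log(freq.valueAt(1)); // the automation is in control
freq.setFromUI(1200);
console.log(freq.valueAt(1)); // the UI is in control; the automation was canceled
freq.evaluate([300, 450]);
console.log(freq.valueAt(0)); // re-evaluating hands control back to the code
```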

Developing Vivace

To change the grammar or the lexer, edit vivace.jison or vivace.jisonlex, respectively, and then run:

npm install jison -g

jison vivace.jison vivace.jisonlex -o vivace.js